Meta’s LlamaFirewall: A Game-Changer in AI Security

In a groundbreaking move towards enhanced AI security, Meta has unveiled its LlamaFirewall Framework. This innovative solution is designed to tackle the pressing issues of AI jailbreaks, injections, and insecure code, offering a comprehensive safeguard against vulnerabilities in AI systems. This article explores the framework’s features, benefits, and implications for the future of AI security.

Understanding LlamaFirewall

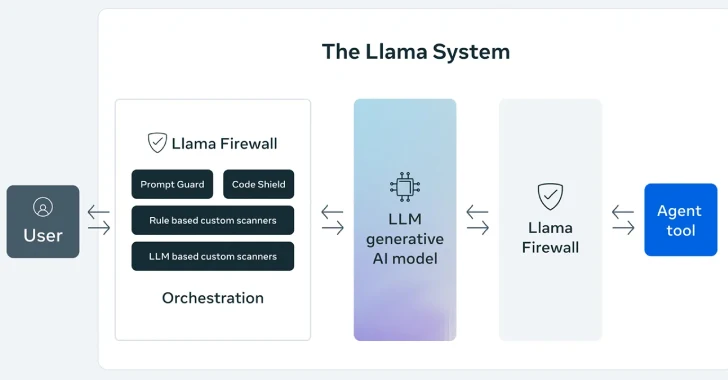

LlamaFirewall is Meta’s latest framework aimed at strengthening the security of AI models. It focuses on preventing unauthorized jailbreaks, code injections, and the execution of insecure code. The framework operates by using advanced filtering techniques and real-time monitoring to detect and mitigate threats as they arise.

Key Features and Capabilities

The LlamaFirewall Framework boasts a set of impressive features designed to tighten AI security. These include real-time threat detection, automated response mechanisms, and compatibility with a wide range of AI systems. Its ability to integrate seamlessly with existing AI platforms makes it a versatile solution for diverse security needs.

Implications for AI Development

With its focus on enhancing security, LlamaFirewall is poised to influence the future of AI development significantly. By offering robust protection against potential threats, developers can build more innovative AI applications with confidence, knowing that security is not compromised. This advancement encourages a secure AI ecosystem, fostering innovation and growth.

Future Prospects and Industry Influence

Meta’s LlamaFirewall Framework is expected to set new industry standards for AI security. As threats continue to evolve, having a reliable security framework becomes essential. The broad implementation of LlamaFirewall could drive other tech giants to prioritize security, leading to a more robust and secure technological landscape.

Conclusão

Meta’s LlamaFirewall Framework marks a major step forward in AI security, promising robust protection against increasingly sophisticated threats. By focusing on critical vulnerabilities like jailbreaks and code injections, Meta aims to set a new standard in safeguarding AI environments, paving the way for safer and more reliable AI applications in diverse sectors.