Strategies for Managing Deceptive AI

As artificial intelligence becomes pervasive, AI systems that deceive their users pose significant risks. This article explores strategies for countering deceptive AI and keeping AI technologies secure and trustworthy.

Understanding Deceptive AI

Deceptive AI refers to systems that intentionally mislead or manipulate users by exploiting their trust. Deception can take various forms, such as AI-generated fake news, deepfakes, and biased algorithms. Understanding how deception manifests in AI is crucial for developing effective countermeasures.

Detection and Prevention

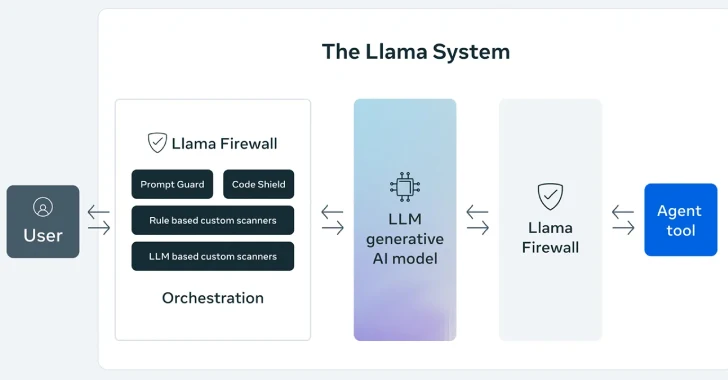

Robust detection mechanisms are essential for identifying deceptive AI activity. Techniques such as anomaly detection, machine-learning classifiers, and blockchain-based provenance records can help trace and prevent malicious activity. These tools enable early detection of deception patterns, which in turn provides a basis for prevention strategies.
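To make the anomaly-detection idea more concrete, here is a minimal sketch that flags observations deviating strongly from a historical baseline using a z-score. The signal (hourly message counts for a single account), the function name `flag_anomalies`, and the default threshold of 3.0 are hypothetical choices made for illustration; a production system would likely use richer features and learned models.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observations, threshold=3.0):
    """Return indices of observations whose z-score against the baseline exceeds threshold.

    Hypothetical helper for illustration only. `baseline` must contain at least
    two values, because statistics.stdev needs two data points.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A constant baseline has no spread, so any differing value is treated as anomalous.
        return [i for i, x in enumerate(observations) if x != mu]
    # Flag values more than `threshold` standard deviations from the baseline mean.
    return [i for i, x in enumerate(observations) if abs(x - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical hourly message counts for an account during a normal period.
    baseline = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
    # New observations; the burst of 90 could indicate automated, coordinated posting.
    observations = [14, 13, 90, 12]
    print(flag_anomalies(baseline, observations))  # → [2]
```

A z-score test is only a starting point: it assumes reasonably stable behavior and will miss deception that looks statistically normal, so it complements rather than replaces the machine-learning and provenance techniques mentioned above.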

Establishing Regulatory Frameworks

A strong regulatory framework is vital for managing deceptive AI. Governments and international bodies must collaborate on guidelines that ensure transparency and accountability in AI systems and that promote ethical AI development and use.

Enhancing Public Awareness

Educating the public about the risks of deceptive AI is key to empowering individuals to make informed decisions. Awareness campaigns and educational programs help people recognize and respond effectively to AI manipulation, thereby reducing the impact of deception.

Future Implications and Innovations

Looking ahead, ongoing research and innovation will play a crucial role in combating deceptive AI. Investing in new technologies and fostering collaboration among researchers, tech companies, and policymakers will be essential to stay ahead of evolving threats. This approach helps ensure that society can harness AI's potential while minimizing its risks.

Conclusion

Tackling deceptive AI requires a multifaceted approach involving technology, policy, and public awareness. By implementing robust detection systems, regulatory frameworks, and education, we can mitigate the risks associated with deceptive AI, safeguarding our technological future.